Understanding Limitations of Current Reward Models

Although reward models play a crucial role in Reinforcement Learning from Human Feedback (RLHF), many of today’s top-performing open models still struggle to reflect the full range of complex human preferences. Even with sophisticated training techniques, meaningful progress has been limited. A major reason appears to be the shortcomings in current preference datasets, which are often too narrow, artificially generated, or poorly vetted. While some rule-based systems are effective for clear tasks like math or coding, they usually fail to capture nuanced human judgment. Moreover, common benchmarks like RewardBench are becoming less reliable indicators of real-world RM performance, showing poor correlation with downstream task success.

Challenges in Preference Data Creation and New Approaches

Creating high-quality preference data has traditionally relied on human annotators, but this method is time-consuming, costly, and sometimes inconsistent. To address this, recent techniques like RLAIF use LLMs to automate annotations, sometimes even outperforming humans. Newer approaches aim to combine the strengths of both by integrating LLM-generated data with human-verified labels. Meanwhile, reward models have evolved from simple scoring systems, such as the Bradley-Terry model, to more complex frameworks, including generative and direct optimization methods. Despite the availability of numerous robust open models and datasets, challenges persist in accurately capturing nuanced human preferences across diverse tasks and languages.
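To make the Bradley-Terry formulation mentioned above concrete, here is a minimal sketch in plain Python. It assumes a reward model that emits one scalar score per response. The function names are illustrative and are not taken from the Skywork code base. Under Bradley-Terry, the probability that the chosen response beats the rejected one is the sigmoid of the score difference, and training minimizes the negative log of that probability.

```python
import math


def bradley_terry_prob(score_chosen: float, score_rejected: float) -> float:
    """Probability that the chosen response is preferred: sigmoid(score difference)."""
    margin = score_chosen - score_rejected
    # Branch on the sign so math.exp never receives a large positive argument.
    if margin >= 0:
        return 1.0 / (1.0 + math.exp(-margin))
    e = math.exp(margin)
    return e / (1.0 + e)


def bradley_terry_loss(score_chosen: float, score_rejected: float) -> float:
    """Negative log-likelihood of the observed preference, i.e. softplus(-margin)."""
    margin = score_chosen - score_rejected
    # Stable softplus: softplus(x) = max(x, 0) + log1p(exp(-|x|)), with x = -margin.
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))
```

The loss shrinks as the reward model scores the chosen response further above the rejected one, and grows when the ordering is reversed.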

Introducing SynPref-40M: Large-Scale Human-AI Preference Dataset

Researchers from 2050 Research and Skywork AI introduce SynPref-40M, a dataset of 40 million preference pairs curated through a two-stage human-AI pipeline. Human annotators ensure quality through strict verification, while LLMs scale up data curation under human guidance. From this dataset, the team developed Skywork-Reward-V2, a family of eight reward models (0.6B–8B parameters) trained on a high-quality subset of 26 million pairs. These models achieve state-of-the-art results across seven leading benchmarks, excelling in alignment, safety, objectivity, and robustness. The study highlights that success comes not just from data volume, but from careful, iterative curation that blends human expertise with AI scalability.

Scalable Two-Stage Human-AI Curation Pipeline

Current open reward models often overfit to narrow benchmarks such as RewardBench, which limits their real-world usefulness. To address this, the researchers introduce a two-stage human-AI pipeline for curating large-scale preference data. Stage 1 starts with human-verified annotations that guide LLMs in labeling diverse preference attributes, followed by iterative training and error analysis to refine the reward model. Stage 2 scales this process by checking consistency between the best reward model so far and a “gold” reward model trained on human-verified data, keeping only reliable samples without further human input. This approach balances quality and scalability, ultimately enabling the creation of tens of millions of high-quality preference pairs.
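The Stage 2 consistency check can be sketched as follows. This is one plausible reading, not the authors' implementation: the article does not state the exact agreement rule, so the sketch assumes a pair is kept only when both the best reward model and the "gold" reward model score the labeled-chosen response above the rejected one. All names here (`Pair`, `Scorer`, `consistency_filter`, and the toy scorers) are hypothetical.

```python
from typing import Callable, Iterable, List, Tuple

Pair = Tuple[str, str, str]           # (prompt, chosen, rejected)
Scorer = Callable[[str, str], float]  # (prompt, response) -> scalar reward


def prefers_chosen(scorer: Scorer, pair: Pair) -> bool:
    """True if the scorer ranks the labeled-chosen response above the rejected one."""
    prompt, chosen, rejected = pair
    return scorer(prompt, chosen) > scorer(prompt, rejected)


def consistency_filter(pairs: Iterable[Pair], best_rm: Scorer, gold_rm: Scorer) -> List[Pair]:
    """Keep only pairs whose label both reward models agree with (assumed rule)."""
    return [p for p in pairs if prefers_chosen(best_rm, p) and prefers_chosen(gold_rm, p)]


# Toy stand-ins for real reward models, for illustration only.
def toy_best_rm(prompt: str, response: str) -> float:
    return float(len(response))  # favors longer responses


def toy_gold_rm(prompt: str, response: str) -> float:
    return float(response.count("because"))  # favors responses that give a reason


pairs = [
    ("q1", "yes, because it is stable", "no"),
    ("q2", "short because", "a much longer answer without reasons"),
    ("q3", "a long answer with no justification", "ok"),
]
kept = consistency_filter(pairs, toy_best_rm, toy_gold_rm)
```

Only the first pair survives here. The second is rejected by the length-based scorer and the third by the reason-based scorer. A real pipeline would replace the toy scorers with actual reward-model inference.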

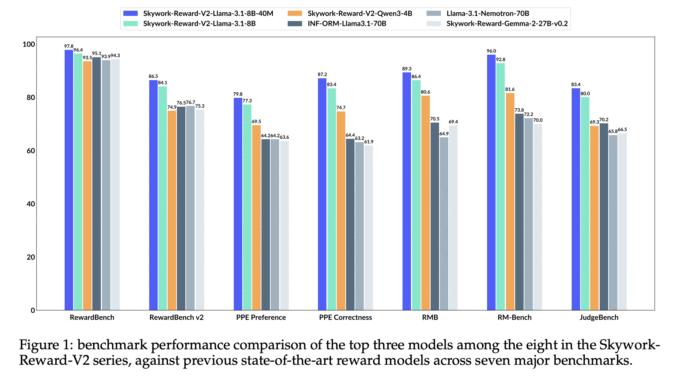

Benchmarking Skywork-Reward-V2: Compact Yet Powerful Models

The Skywork-Reward-V2 series performs strongly across multiple benchmarks, outperforming both larger models (e.g., 70B parameters) and emerging generative reward models. Built on Qwen3 (0.6B–8B) and Llama 3.1/3.2 (1B–8B) backbones, these models achieve high scores on RewardBench, PPE, RM-Bench, and JudgeBench, with the best-performing variant (Llama-3.1-8B-40M) surpassing all others with an average score of 88.6. Despite their smaller size, Skywork-Reward-V2 models benefit from high-quality preference data (SynPref-40M) and efficient training setups, enabling them to generalize better in real-world RLHF scenarios. Notably, even a small model like Qwen3-1.7B outperforms some 70B models, underscoring that training data quality and methodology matter more than sheer parameter count.

Conclusion and Future Outlook: Scaling with Precision

In conclusion, the researchers present SynPref-40M, a large-scale preference dataset built through a two-stage human-AI collaboration that combines human judgment with LLM-based scalability. Using a curated subset of 26 million preference pairs, the team developed Skywork-Reward-V2, a suite of eight reward models (0.6B–8B parameters) that outperform existing models across seven key benchmarks. These models generalize well in aligning with human values while maintaining correctness, safety, and robustness to bias. Extensive studies confirm that both data quality and the curation method are key drivers of performance. Looking forward, the researchers aim to explore new training strategies as reward models become central to LLM development and alignment.


Sana Hassan, a consulting intern at Marktechpost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.
